The results? Even the best models barely cleared 40% of that potential earnings pool, showing both the real promise and the current limits of AI’s capabilities.

For technical and product leaders who wonder whether AI could turbocharge their own teams, these findings show that automated coding tools can spark impressive productivity gains, yet complex, multi-layered projects still need a human touch. Some tasks, like squashing routine bugs or evaluating straightforward proposals, can be done remarkably well by AI. But once you hit deeper design questions or tricky cross-file bugs, the technology often falls short.

AI vs. Freelance: Finding the Right Balance

It turns out that AI can make quick work of smaller, clearly defined tasks, like tackling repetitive bugs or generating targeted fixes, especially in spots that consume valuable developer hours. Models such as Anthropic’s Claude 3.5 Sonnet and OpenAI’s GPT-4o and o1 show real time savings by catching errors and suggesting quick patches. This frees your team to focus on higher-level strategy and creativity instead of constantly playing “bug whack-a-mole.”

That said, the study also highlights where these models struggle. Once a task’s scope expands, particularly for projects priced above $1,000, which often require deeper analysis and system-wide changes, AI solutions tend to fall apart. Think cross-file debugging, multi-platform features, or tasks that call for deep domain knowledge: these are precisely the challenges where human engineers still need to dig in and collaborate.

One more surprise: AI fared better at evaluating solutions than at generating them from scratch. In management-style tasks, where the AI had to choose the best of several existing proposals, performance scores were higher than in pure coding tasks. This suggests AI is good at sorting through multiple options and picking the winner. But if you expect it to build an entire feature without much oversight, it may only take you part of the way. Still, with top models earning hundreds of thousands of dollars on simulated freelance tasks, there’s a lot of real-world potential for leaders who know how to balance AI automation with human oversight.

Turning Insights Into Action

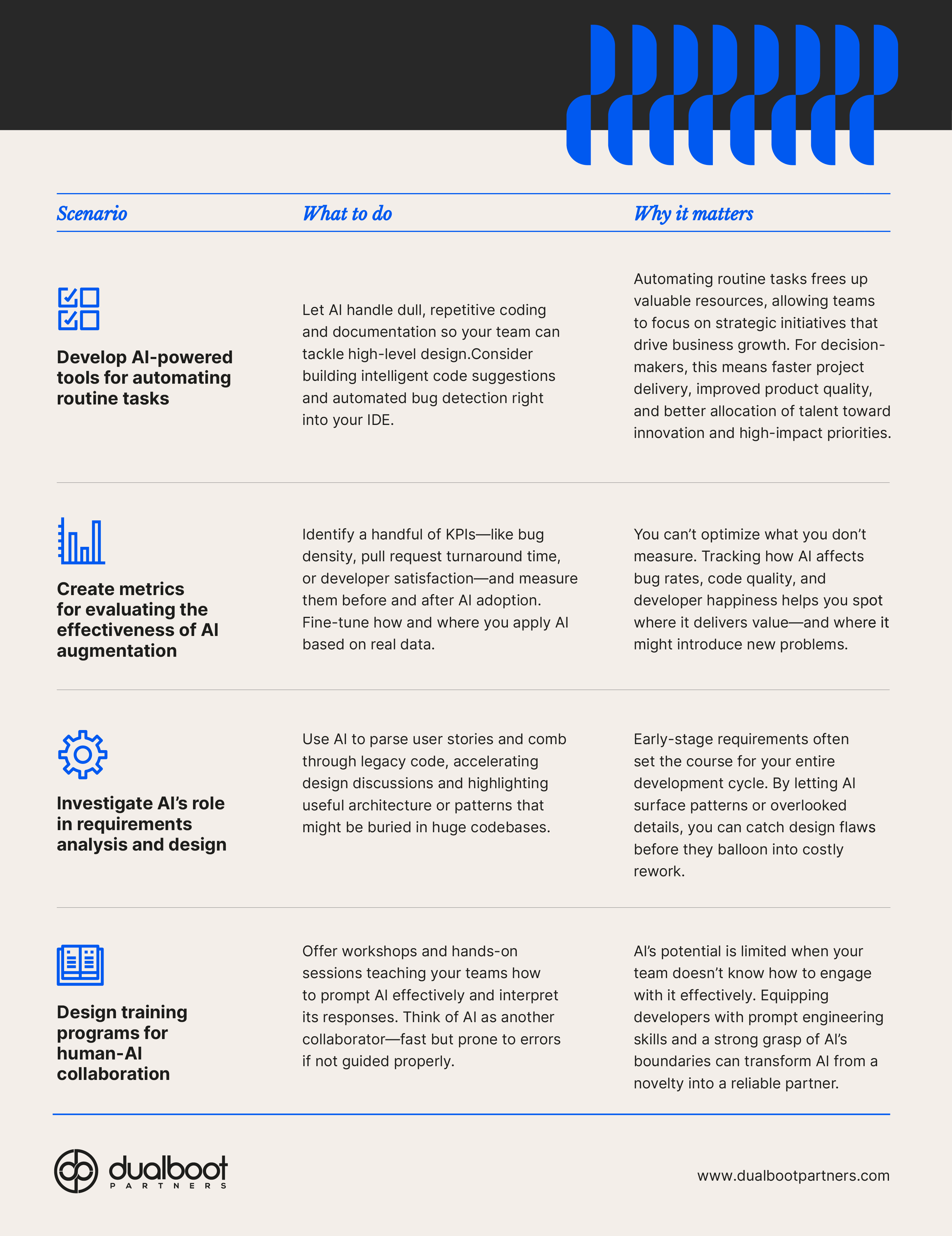

Maximizing AI’s impact on your business isn’t about replacement; it’s about collaboration. This framework highlights practical ways businesses can combine AI’s strengths with human expertise to multiply their impact.

Proving AI’s Value in Your Workflow

The SWE-Lancer study demonstrates that AI-based coding solutions can reduce grunt work, increase feature velocity, and serve as a powerful decision-support tool. But for now, ambitious, multi-layered engineering projects still benefit from the creativity and nuance that only experienced developers can provide.

Whether you’re looking to automate workflows, level up your design process, or create a truly responsible AI practice, these steps offer a roadmap. And if you’re aiming to turn immediate insights into long-term impact and start tracking the metrics that truly matter, get our free AI KPI Framework Worksheet.

Discover step-by-step guidance for measuring success in each AI scenario, so you can optimize performance and confidently demonstrate ROI.

Contact our AI experts to help you develop a custom AI strategy, integrate advanced tools effectively, and navigate ethical challenges. With the right mix of AI power and human expertise, you’ll keep your business both innovative and resilient for the long haul.
